CRC16-CCITT optimization for ARM Cortex M4

(Ooops) I did it again ! After doing the CRC32 optimization, I tried the same for CRC16-CCITT. This one is harder (but not so hard) to optimize for a C compiler because in modern CPU we mainly have 32 bits/64 bits registers, but for CRC16, we have to play with 16 bits values, split into upper and lower parts which need shift + mask operations.

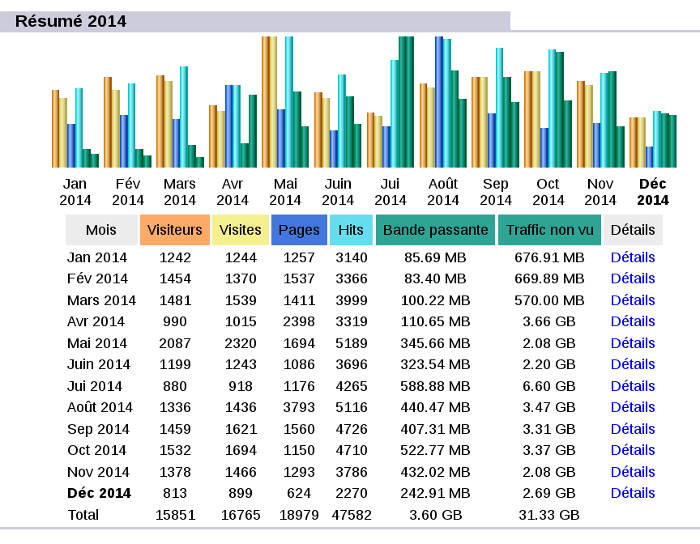

Whatever, context is the same : target is ARM Cortex M4, no data/instruction cache, SRAM memory and GCC 9. One interesting point is that this time, I didn't target armv6, but armv7 (we'll see why later). Figures are still impressive, with a gain between 50% and 60% !

- -Os compilation : 21.7 milliseconds

- -O2 compilation : 17.2 milliseconds

- -Os + optimizations : 8.8 milliseconds

I used the same optimization tricks than CRC32 (see article) plus this ones :

Use specific instruction if you can

ARMv7 instruction set provides thumb2 instructions which contains bitfield extraction. This is really really (yes 2 times) nice ! instruction ubfx (and variants) allows to extract a specified range of bits from a register and thus avoid to do shift + mask (2 instructions).

Be careful on instruction size

Thumb2 is really nice because you can mix 16 bits and 32 bits instructions. But, in order to save space (thus speed), you have to carefully choose your instructions. The case here is :

lsrs r5, r5, #24 C equivalent r5 = r5 >> 24

and

ubfx r5, r5, #24, #8 C equivalent r5 = (r5 & 0xff000000) >> 24

They both do have the same result but the first one is an "old" instruction and can be encoded on 16 bits while the second is new and is encoded into 32 bits.

Don't take care on unused register part

At some point, I do a 32 bits xor operation which generate random values on bits 31..15. But we don't care because we have to focus on 16 bits lower part.

Here is the optimized function. Whole C file can be found here. Optimization is effective for 16 bytes blocks (aligned).

uint16_t crc16_ccitt_opt16(

const unsigned char* block,

unsigned int blockLength,

uint16_t crc)

{

/* unsigned int i; */

/* for(i=0U; i<blockLength; i++){ */

/* uint16_t tmp = (crc >> 8) ^ (uint16_t) block[i]; */

/* crc = ((uint16_t)(crc << 8U)) ^ crc16_ccitt_table[tmp]; */

/* } */

/*

r0 -> s

r1 -> len

r2 -> crc16val

r3 -> crc16tab

r4 -> curval[0]

r5 -> (crc >> 8) ^ (uint16_t) block[i]

r6 -> crc16_ccitt_table[(crc >> 8) ^ (uint16_t) block[i])

r7 -> curval[1]

r8 -> curval[2]

r9 -> curval[3]

*/

__asm__ volatile (

"mov r0, %1\n"

"mov r1, %2\n"

"mov r2, %3\n"

"mov r3, %4\n"

"push {r7, r8, r9}\n"

"crc16_opt16_loop:\n"

"ldm r0!, {r4, r7, r8, r9}\n"

// curval[0]

"eor r5, r4, r2, lsr #8\n"

"uxtb r5, r5\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r4, r2\n"

"ubfx r5, r5, #8, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r4, r2, lsl #8\n"

"ubfx r5, r5, #16, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r4, r2, lsl #16\n"

"lsrs r5, r5, #24\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

// curval[1]

"eor r5, r7, r2, lsr #8\n"

"uxtb r5, r5\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r7, r2\n"

"ubfx r5, r5, #8, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r7, r2, lsl #8\n"

"ubfx r5, r5, #16, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r7, r2, lsl #16\n"

"lsrs r5, r5, #24\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

// curval[2]

"eor r5, r8, r2, lsr #8\n"

"uxtb r5, r5\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r8, r2\n"

"ubfx r5, r5, #8, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r8, r2, lsl #8\n"

"ubfx r5, r5, #16, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r8, r2, lsl #16\n"

"lsrs r5, r5, #24\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

// curval[3]

"eor r5, r9, r2, lsr #8\n"

"uxtb r5, r5\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r9, r2\n"

"ubfx r5, r5, #8, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r9, r2, lsl #8\n"

"ubfx r5, r5, #16, #8\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

"eor r2, r6, r2, lsl #8\n"

"eor r5, r9, r2, lsl #16\n"

"lsrs r5, r5, #24\n\n"

"ldrh r6, [r3, r5, lsl #1]\n"

// Last two lines inverted

"subs r1, r1, #16\n"

"eor r2, r6, r2, lsl #8\n"

"bne crc16_opt16_loop\n"

"pop {r7, r8, r9}\n"

"strh r2, %0\n"

: "=m" (crc)

: "r" (block), "r" (blockLength), "r" (crc), "r" (crc16_ccitt_table)

// Missing r7-r9, manually save it

: "r0", "r1", "r2", "r3", "r4", "r5", "r6"

);

return crc;

}

We can see that computation is not the same for all parts of the 32 bits register while it was really symmetric in CRC32.

Code has to be compiled with minimum -O1 or -Os option

For comparison, the (quite good) code generated by GCC 12 with -Os, working on a single byte :

594: 428b cmp r3, r1

596: d100 bne.n 59a <crc16_ccitt+0x12>

598: bd30 pop {r4, r5, pc}

59a: f813 2b01 ldrb.w r2, [r3], #1

59e: 0204 lsls r4, r0, #8

5a0: b2a4 uxth r4, r4

5a2: ea82 2210 eor.w r2, r2, r0, lsr #8

5a6: f835 2012 ldrh.w r2, [r5, r2, lsl #1]

5aa: ea84 0002 eor.w r0, r4, r2

5ae: e7f1 b.n 594 <crc16_ccitt+0xc>

It's clearly focus on Armv6 compatibility as it use masking operation + shift at lines 59e and 5a0.